All published articles of this journal are available on ScienceDirect.

Wildfire CNN: An Enhanced Wildfire Detection Model Leveraging CNN and VIIRS in Indian Context

Abstract

Introduction

Wildfires are an unpredictable global hazard that significantly affects environmental change. Coarse spatial resolution sensors, such as the Moderate Resolution Imaging Spectroradiometer (MODIS) and the Visible Infrared Imaging Radiometer Suite (VIIRS), offer an accurate and affordable way to identify and monitor wildfire areas. Wildfire observations from VIIRS sensor data are around three times as extensive as those from MODIS.

Objective

The traditional contextual wildfire detection method for VIIRS data relies mainly on threshold values to classify pixels as fire or non-fire; it performs poorly in detecting wildfire areas and fails to detect small fires. This paper proposes a wildfire detection method based on the Wildfiredetect Convolutional Neural Network model for an effective wildfire detection and monitoring system using VIIRS data.

Methods

The proposed method uses a Convolutional Neural Network model and is tested on a study-area dataset containing fire and non-fire spots. The model is evaluated using recall rate, precision rate, omission error, commission error, F-measure, and accuracy.

Results

For the training data, the experimental analysis of the study area shows a 99.69% recall rate, 99.79% precision rate, 0.3% omission error, 0.2% commission error, 99.73% F-measure, and 99.7% accuracy. The proposed method also detects small fires in the Alaska forest dataset, achieving on the testing data a 100% recall rate, 99.2% precision rate, 0% omission error, 0.7% commission error, 99.69% F-measure, and 99.3% accuracy. The proposed model's accuracy is 26.17 percentage points higher than that of the improved contextual algorithm.

Conclusion

The experimental findings demonstrate that the proposed model identifies small fires and works well with VIIRS data for wildfire detection and monitoring systems.

1. INTRODUCTION

Wildfires may be caused by natural factors or human activity; humans are responsible for 90% of wildfires, and natural causes account for the remaining 10% [1]. Wildfires affect biodiversity and local and global ecosystems, and they contribute to forest degradation, plant and animal extinction, and climate change. An efficient wildfire detection system improves life safety and helps avert these consequences [2]. Satellite remote sensing is widely used to detect and characterize active wildfires. Compared with geostationary satellites, polar-orbiting satellites offer better coverage and more frequent imaging at high latitudes, because their orbits converge near the poles. Geostationary satellites provide high temporal resolution and are commonly used for real-time wildfire detection [3]. However, Visible Infrared Imaging Radiometer Suite (VIIRS) and Moderate Resolution Imaging Spectroradiometer (MODIS) data offer higher spatial resolution and nighttime detection, complementing geostationary data with detailed observations.

Satellite multispectral imaging sensors such as VIIRS and MODIS have been used in wildfire detection systems. Active fire detection from satellite remote sensing data relies on the infrared radiation released by burning biomass. To identify image pixels whose footprint on the Earth's surface contains active combustion, the sensors measure the fire's radiation in the shortwave, mid-wave, and thermal infrared regions of the electromagnetic spectrum. NASA's MODIS and VIIRS fire products, from sensors on polar-orbiting satellites, are the most widely used operational fire detection products. MODIS detects fires in 1 km pixels using a contextual algorithm, identifying fires burning at the time of overpass under smoke-free conditions [4].

| Item | MODIS | VIIRS |
|---|---|---|
| Sensor | 36 spectral bands | 16 moderate-resolution bands (M-bands), 5 high-resolution imagery bands (I-bands), day (M13) and night (M15) bands |
| Satellite | Aqua and Terra | Suomi National Polar-orbiting Partnership |
| Fire detection algorithm | Contextual | Thresholding and contextual |
| Equatorial pass | Terra (10:30 am and 10:30 pm); Aqua (1:30 pm and 1:30 am) | 1:30 pm and 1:30 am |
| Resolution | 1 km × 1 km | 375 m × 375 m and 750 m × 750 m |
| Nighttime performance | Poor | Good |
| Accurate detection of small fires | No | Yes |
| Canopy fire detection | Poor | Good |

The VIIRS instrument is carried on the Suomi National Polar-orbiting Partnership (SNPP) and National Oceanic and Atmospheric Administration (NOAA-20) weather satellites. Designed and manufactured by Raytheon, the VIIRS sensor provides high spatial resolution, collecting imagery and radiometric measurements of the atmosphere, land, cryosphere, and oceans in the visible and infrared bands of the electromagnetic spectrum [5].

Since 2004, the Forest Survey of India has been alerting State Forest Departments to wildfire locations detected by the MODIS sensors on board NASA's Aqua and Terra satellites. Fire locations were initially transmitted by fax and, as communication technology advanced, by SMS and email. In January 2019, India's Ministry of Environment, Forest and Climate Change launched the Forest Fire Alert System (FAST) to monitor wildfires using real-time VIIRS information. Its main features are (i) wildfire alerts at the high 375 m × 375 m VIIRS resolution, (ii) process automation, (iii) customized alerts, (iv) an improved user experience, and (v) a control panel for state nodal officers [5]. Table 1 compares the MODIS and VIIRS sensors.

In addition to the characteristics listed in Table 1, VIIRS provides data on geographic coordinates, brightness temperature, time of day, satellite view, Fire Radiative Power (FRP), solar zenith angle, and detection confidence (low, nominal, high) [5]. VIIRS incorporates two mid-wave infrared bands, which give higher spatial resolution (smaller pixel areas) and improve the potential for active fire detection relative to MODIS. Because its resolution is finer than that of MODIS and it covers the entire area several times a day at low cost, VIIRS is suited to detecting small fires. Key distinctions between VIIRS and MODIS include the following:

i. VIIRS fire observations are about three times more detailed than MODIS fire observations.

ii. VIIRS uses data reduction steps, such as an onboard pixel aggregation scheme that limits the growth of pixel size, which are not used in MODIS.

iii. VIIRS identifies small fires and retrieves FRP more easily than MODIS.

iv. MODIS's insensitivity to small fires can bias fire emission estimates.

Timely satellite detection of active wildfires in a target area is critical. The conventional contextual fire detection approach applied to VIIRS data misses a high percentage of fire spots, is less accurate, and cannot identify small fires. The main goal of this research is to develop a wildfire detection method based on the Wildfiredetect Convolutional Neural Network (Wildfiredetect CNN) that improves accuracy and lowers omission error on VIIRS data in India, since VIIRS data offer an accurate and cost-effective way to detect and monitor wildfire areas. Accurately identifying fire spots in remote sensing data supports post-wildfire operations, decision-making in wildfire management, and wildfire prevention.

Existing wildfire detection algorithms have key limitations, such as false detections, low detection accuracy, misinterpreted fires, failure to detect small fires, and reduced accuracy under adverse weather conditions. To overcome these challenges, more efficient algorithms need to be developed for wildfire detection and monitoring systems.

Our contributions are summarized as follows:

- A new wildfire detection method based on Wildfiredetect CNN is proposed for effective wildfire detection on VIIRS data in India.

- The proposed Wildfiredetect CNN method is developed and tested on a dataset of fire and non-fire spots.

- Recall rate, precision rate, omission error, commission error, F-measure, and accuracy are used to compare the proposed model with existing methods.

The rest of this paper is organized as follows. Section 2 reviews related work on contextual and machine learning based wildfire detection algorithms. Section 3 presents the proposed Wildfiredetect CNN method for active fire detection. Section 4 describes the study area, and Section 5 presents the experimental setup and analyzes the results using the evaluation metrics. Section 6 presents the conclusions and future work.

2. RELATED WORK

Satellite remote sensing identifies fires by combining brightness temperature variations in the mid- and thermal infrared bands with reflectance in the visible and near-infrared bands. Recently, several researchers have used data from two distinct polar-orbiting instruments, VIIRS and MODIS, to provide accurate wildfire detection. Fire products derived from VIIRS and MODIS imagery provide data suitable for wildfire detection on a global scale.

2.1. Contextual Method based Wildfire Detection System

The contextual method for VIIRS data comprises an initial threshold test that identifies candidate fire pixels, a contextual test that confirms fire in the candidate pixels, and further threshold tests that reject false alarms. The VIIRS I4 band is the primary driver of fire detection, and the VIIRS 375 m active fire product performs better than the VIIRS 750 m standard fire product for both daytime and nighttime data. The VIIRS 375 m fire data also showed greater detection capability and better consistency than MODIS fire detection data [5]. A limitation of this work is the high proportion of low-confidence detections. Contextual algorithms also have weaknesses such as static thresholds for candidate fire pixels, disregard for the sensitivity of the test conditions, and failure to detect small and cool fires. Table 2 compares existing wildfire detection approaches with their results and limitations.
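The candidate-then-contextual structure described above can be illustrated with a small Python sketch. It is a simplified two-stage test, not the operational VIIRS 375 m algorithm [5]: the threshold values (`CANDIDATE_BT4_K`, `CANDIDATE_DT_K`, `K_SIGMA`) and the function name `contextual_fire_test` are hypothetical placeholders, and the background pixels are assumed to be already screened for clouds and water.

```python
from statistics import fmean, pstdev

# Hypothetical thresholds, for illustration only (not the operational values).
CANDIDATE_BT4_K = 325.0  # minimum I4 (mid-infrared) brightness temperature for a candidate
CANDIDATE_DT_K = 25.0    # minimum I4 minus I5 brightness temperature difference for a candidate
K_SIGMA = 3.0            # number of background standard deviations the pixel must exceed


def contextual_fire_test(bt4, bt5, background):
    """Classify one pixel as 'fire' or 'non-fire'.

    bt4, bt5: I4 and I5 brightness temperatures (K) of the pixel under test.
    background: list of (bt4, bt5) pairs for valid neighbouring pixels.
    """
    if not background:
        raise ValueError("at least one valid background pixel is required")
    dt = bt4 - bt5
    # Stage 1: static threshold test that selects candidate fire pixels.
    if bt4 < CANDIDATE_BT4_K or dt < CANDIDATE_DT_K:
        return "non-fire"
    # Stage 2: contextual test against the statistics of the surrounding pixels.
    bg_bt4 = [b4 for b4, _ in background]
    bg_dt = [b4 - b5 for b4, b5 in background]
    bt4_ok = bt4 > fmean(bg_bt4) + K_SIGMA * pstdev(bg_bt4)
    dt_ok = dt > fmean(bg_dt) + K_SIGMA * pstdev(bg_dt)
    return "fire" if bt4_ok and dt_ok else "non-fire"


# Usage with a synthetic, fairly uniform background.
background = [(300.0 + i % 3, 290.0) for i in range(20)]
print(contextual_fire_test(340.0, 300.0, background))  # -> fire
print(contextual_fire_test(310.0, 300.0, background))  # -> non-fire (fails stage 1)
```

The fixed stage-1 thresholds are exactly the "static threshold" weakness noted above: a pixel just below `CANDIDATE_BT4_K` is never examined contextually, however anomalous it is relative to its neighbours.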

| Author | Approach | Results | Limitations |
|---|---|---|---|
| Schroeder et al. (2014) [5] | Biomass burning and thermal anomalies detected by day and night in the MODIS fire product using a contextual method | Commission errors below 1.2% | Fails to detect small fires |
| Waigl et al. (2017) [6] | VIFDAHL used to identify low- and high-intensity active fires in Alaska's 2016 boreal forest fire data | Detects more fire pixels than the MODIS and VIIRS global fire products | Repeated fire detections at the same location need further attention |
| Zhang et al. (2017) [7] | I-band (375 m) and M-band (750 m) data used for active fire detection and characterization | 5 to 10 times more fire pixels than MODIS data; 4 times more reliable FRP records than MODIS | Does not analyze fire emission rates |
| Wang et al. (2020) [8] | A novel method that resamples Day-Night Band (DNB) pixels to M-band pixels | Higher accuracy for nighttime fires | Results not compared with standard data |
| Fu et al. (2020) [9] | FRP and fire detection of MYD14 and VNP14IMG compared in overlapping MODIS-VIIRS orbits | VIIRS detected fire pixels at 65% and MODIS at 83%; FRP was lower for VIIRS than for MODIS | False positive alarms need further analysis |
| Zhang et al. (2021) [10] | Adaptive threshold selected by a bubble sort method based on the radiation characteristics of small fires | Accuracy of 53.85% in summer and 73.53% in winter; overall 18.69% higher accuracy with a 28.91% lower error rate | Missed fire points need to be analyzed and verified |
| Gong et al. (2021) [11] | Spatio-temporal contextual brightness temperature prediction model (STCM) | RMSE 12.54% lower than the contextual method and 9.12% lower than the temporal-contextual method | Fails to detect small fires |
| Coskuner (2022) [12] | Survey of wildfire monitoring | VIIRS accuracy between 1.3% and 25.6% for different forest land covers | VIIRS and MODIS active fire products are limited for evaluating wildfires smaller than 10 ha |
| Aghazadeh (2023) [13] | Dynamic algorithm | Various threshold-based contextual methods evaluated; the IGBP algorithm detects 86% of wildfires with a 14% error rate | Accuracy could be improved further |

Waigl et al. [6] developed a modified contextual wildfire detection algorithm, the VIIRS I-band Fire Detection Algorithm for High Latitudes (VIFDAHL), using data from Alaska's 2016 boreal wildfire season. VIFDAHL classifies fire pixels as high or low intensity and removes duplicate detections caused by the bowtie effect in VIIRS data. The method detected 30–90% more fire pixels than the MODIS and VIIRS global fire products.

Zhang et al. [7] presented VIIRS-IM, which combines 750 m M-band and 375 m I-band data, for wildfire detection. The study covered eastern China and showed that VIIRS I-band data facilitate the detection of smaller active fires. Its results were compared with Aqua-MODIS and existing VIIRS I-band fire detections. VIFDAHL captures the fire distribution, accurately classifies high- and low-intensity fires, and correctly detects 30–90% more fire pixels than MODIS data. VIIRS-IM also considers FRP, and the experimental results show the lowest-FRP pixels in the agricultural burning areas of eastern China.

Fire Radiative Power (FRP) is the rate at which a fire releases radiative energy; it has been used to estimate fire intensity, smoke injection height, fire severity, and post-fire forest productivity. FRP can be calculated using Eq. (1).

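$$\mathrm{FRP} = \frac{A_{fp}\,\sigma}{a\,\tau_4}\left(L_{4f} - L_{4b}\right) \tag{1}$$

where $L_{4f}$ and $L_{4b}$ are the 4 µm radiances of the fire and background pixels, $A_{fp}$ is the fire pixel area, $\sigma$ is the Stefan–Boltzmann constant ($5.67 \times 10^{-8}\ \mathrm{W\,m^{-2}\,K^{-4}}$), $\tau_4$ is the atmospheric transmittance at 4 µm, and $a$ is an empirical constant ($3.0 \times 10^{-9}\ \mathrm{W\,m^{-2}\,sr^{-1}\,\mu m^{-1}\,K^{-4}}$).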
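Eq. (1) translates directly into code. The sketch below assumes radiances in W m⁻² sr⁻¹ µm⁻¹ and a pixel area in m²; the function name `frp_mw` and the example radiances are hypothetical, and using a 375 m × 375 m footprint ignores the growth of VIIRS pixel size away from nadir.

```python
# Constants as given with Eq. (1).
SIGMA = 5.67e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
A_CONST = 3.0e-9  # empirical constant a, W m^-2 sr^-1 um^-1 K^-4


def frp_mw(l4_fire, l4_background, pixel_area_m2, tau4=1.0):
    """Fire Radiative Power of one fire pixel, in megawatts, using Eq. (1).

    l4_fire, l4_background: 4 um radiances in W m^-2 sr^-1 um^-1.
    pixel_area_m2: fire pixel area A_fp in m^2.
    tau4: atmospheric transmittance at 4 um (1.0 means no attenuation).
    """
    frp_watts = pixel_area_m2 * SIGMA / (A_CONST * tau4) * (l4_fire - l4_background)
    return frp_watts / 1e6


# Hypothetical example: a 375 m x 375 m pixel whose 4 um radiance exceeds the background by 1.0.
print(round(frp_mw(2.0, 1.0, 375.0 * 375.0), 2))  # -> 2.66
```

Because $\tau_4$ sits in the denominator, stronger atmospheric attenuation (smaller $\tau_4$) raises the FRP estimated from the same observed radiance difference.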

VIIRS is sensitive to visible light emitted by wildfires at night. A method that resamples Day-Night Band (DNB) pixel radiances to M-band footprint pixels to improve nighttime wildfire detection has been presented [8]. A case study of the 2018 Camp Fire in California showed that the method provides higher accuracy for nighttime fires.

Fu et al. [9] analyzed the fire detection and FRP estimation performance of the VIIRS and MODIS fire products. The study surveyed VIIRS data from 2012 to 2017 in Northeastern Asia, where wildfires and agricultural burning are prevalent, and compared MODIS and VIIRS fire detections and FRP using simultaneous observations in overlapping orbits. The results show that VIIRS detected wildfires more effectively across land cover categories, whereas MODIS had high fire omission in low-biomass areas. VIIRS produced fewer false fire pixels than MODIS, but the commission errors of the two were similar. Finally, VIIRS was effective for low-intensity fires in low-biomass areas, while for high-intensity fires MODIS retrieved FRP accurately under dense smoke and strong atmospheric absorption.

To overcome the contextual method's tendency to ignore small fires, a new weighted wildfire detection algorithm has been proposed to detect small fires accurately in VIIRS data [10]. It uses the mid-infrared channel to identify wildfires in East Asia, where crop-burning fires were detected with an adaptive threshold selected by a bubble sort method based on the radiation characteristics of small fires. The method achieved accuracies of 53.85% in summer and 73.53% in winter. However, the missed VIIRS fire points still need to be analyzed.

A spatio-temporal contextual brightness temperature prediction model (STCM) has also been proposed for wildfire detection [11]. In a study of San Diego in Southern California, USA, its RMSE was 12.54% lower than that of the contextual method and 9.12% lower than that of the temporal-contextual method. The method uses MODIS products for wildfire detection but does not address small fires.

The performance of MODIS and VIIRS wildfire detection across land cover types has been evaluated in Turkey using fire data from 2015 and 2019 [12]. VIIRS accuracy ranged from 1.3% to 25.6% across five land cover types: herbaceous vegetation, croplands, closed forests, open forests, and shrublands. However, both MODIS and VIIRS are limited in detecting wildfires, especially those smaller than 10 ha.

Wildfire detection in the Kayamaki Wildlife study area has also been surveyed using contextual algorithms [13]. That study evaluated several contextual algorithms on MODIS data, namely IGBP, Giglio, Extended, Dynamic, and a combination of Giglio and Extended; the IGBP algorithm detected 86% of wildfires with a 14% error rate. There remains room to improve accuracy.

Contextual approaches to wildfire detection rely mostly on statistics of the surrounding pixels. However, the appropriate threshold may vary with land cover type, season, and climate. Clouds and thick smoke also degrade detection performance, making these approaches susceptible to omissions and false positives.

2.2. Machine Learning based Wildfire Detection System

Machine Learning (ML) techniques are increasingly used to find relationships in data and to detect and predict wildfire growth. Applying ML algorithms to the detection, rapid monitoring, and early prediction of active fires could further improve current wildfire detection systems [14].

Muhammad et al. [15] proposed a CNN to identify fire in images from surveillance videos. However, such methods perform poorly for wildfire detection over large areas. Ba et al. [16] proposed SmokeNet, which improves scene classification by incorporating spatial and channel-wise attention into a CNN. The experimental results indicate that the method classifies smoke scenes in MODIS data. Gargiulo et al. [17] designed a CNN-based data fusion method for wildfire detection in Sentinel-2 images. The precision and recall of the method are 84.14% and 56.42%, respectively. In this method, the CNN weights were fine-tuned for the geographic study area (Vesuvius, Italy).

Pinto et al. [18] used a U-Net architecture combining CNNs and Long Short-Term Memory (LSTM) networks on VIIRS 750 m band data. The model is trained on the VIIRS 750 m red, middle-infrared (MIR), and near-infrared (NIR) bands together with VIIRS 375 m active fires. The authors studied regions of Brazil, California, Portugal, Australia, and Mozambique and reported an accuracy of 90.6%. Bushnaq et al. [19] used Unmanned Aerial Vehicle (UAV) images or videos to detect wildfires. Rashkovetsky et al. [20] investigated fire-affected areas using satellite images in the infrared, visible, and microwave domains. They applied a CNN-based U-Net architecture to multisensor data (Sentinel-1, Sentinel-2, Sentinel-3, and MODIS) for wildfire detection; the combination of Sentinel-2 and Sentinel-3 data achieved the highest accuracy, 96%, under cloudy conditions.

Khan and Khan [21] presented FFireNet, which uses a MobileNetV2 model to discriminate fire from non-fire images. On a dataset generated by an intelligent surveillance system, it achieved 99.47% recall, 98.42% accuracy, a 1.58% error rate, and 97.42% precision. Almasoud [22] combined deep learning (DL) with computer vision to detect and monitor wildfires in the IWFFDA-DL model (Intelligent Wild Forest Fire Detection and Alarming system using DL). The model uses a CNN with a Bidirectional LSTM (BLSTM) to detect fires and the bacterial foraging optimization (BFO) algorithm to improve detection performance. On a globally collected fire dataset, under conditions of smoke below 50 dB/m, normal fire status, heat below 55 °C, and flame below 180 µm, it achieved 97.88% precision, 99.46% recall, 99.56% accuracy, a 98.65% F-score, and an MAE of 1.36%.

Abdusalomov et al. [23] proposed a Detectron2-based model that achieved 99.3% precision on a large custom dataset, addressing the challenges of day and night fires and of light and shadows in the images. Thangavel et al. [24] used a one-dimensional CNN on PRISMA hyperspectral imagery to detect wildfires in Australia, achieving 97.83% accuracy on the validation dataset.

Fire incidents and hotspot activity in four national parks in Thailand have also been studied using MODIS data. That paper suggests the early detection of forest and land fires through Artificial Intelligence (AI) [25]. However, the study aimed to analyze and monitor fires rather than to build an effective wildfire detection system.

Priya et al. [26] used ML with post-fire VIIRS satellite imagery to detect vegetation changes and evaluate recovery, reporting a training error of 0.075.

The studies above leave several research issues unresolved. The literature review revealed a research gap in contextual techniques: the threshold may change with land cover type and with climatic and seasonal factors. As a result, these techniques are easily affected by clouds and dense smoke and are susceptible to omissions and false positives. Because ML-based techniques offer strong adaptability, learning capacity, and portability, they can provide high accuracy with few false alarms [27]. Accurate wildfire detection nevertheless remains difficult, and current techniques that use remote sensing data need to be improved [28].

3. METHOD

3.1. Proposed Wildfiredetect CNN Model

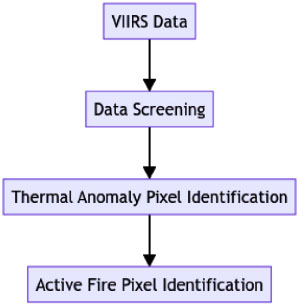

The steps for detecting wildfire pixels using VIIRS data are shown in Fig. (1).

Procedure for detecting wildfires using VIIRS data.

The wildfire detection algorithm has the following major steps:

3.1.2. Data Screening

Unwanted pixels, such as those representing water bodies or stripes, are filtered out.

3.1.3. Thermal Anomaly Identification

Candidate thermal anomaly pixels that might indicate a fire are identified by comparing each pixel with its surrounding pixels.

3.1.4. Fire Pixel Detection

Potential fire pixels are detected using specialized wildfire detection algorithms.

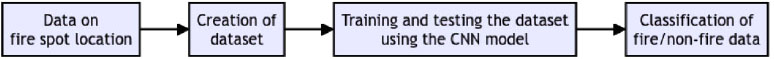

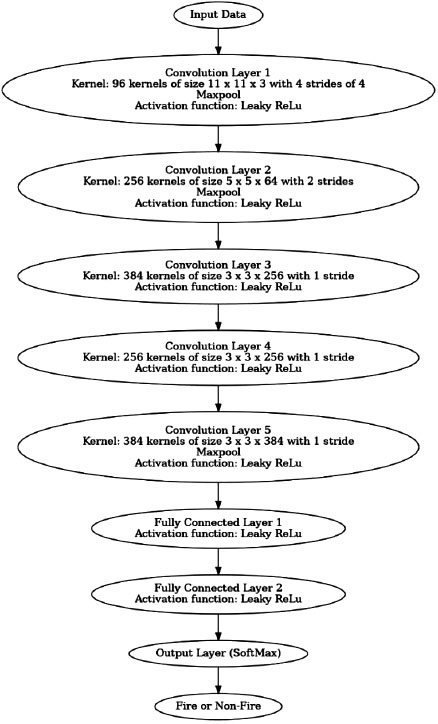

Workflow of wildfiredetect CNN model.

Wildfiredetect CNN model framework.

The Wildfiredetect CNN model is proposed to improve the accuracy of wildfire detection using VIIRS data. Compared with contextual methods, it aims to reduce false positives and omission errors, while the high resolution of VIIRS data makes fire points easier to detect.

Fig. (2) shows the workflow of the Wildfiredetect CNN model. The position and time of each fire spot are taken from the VIIRS fire product data, and a 5 × 5 grid is formed centred on each pixel. Information about the surrounding pixels is captured by the mean and standard deviation of each band within the grid. The training set uses fire and non-fire points in a 1:2 ratio, so that the network can fully learn the characteristics of fires and classify the data correctly. Fire and non-fire spots are randomly assigned to the training set, and 15% of the data is held out as a validation set to test accuracy. The algorithm has two stages: feature extraction, which extracts features from the input data, and classification, in which fully connected layers output whether the spot is fire or non-fire.
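The 5 × 5 grid features described above can be sketched as follows. The sketch assumes the bands are co-registered 2-D grids held as nested Python lists; the function name `window_stats`, the use of the population standard deviation, and the rejection of spots too close to the image border are assumptions, since the text does not specify them.

```python
from statistics import fmean, pstdev


def window_stats(bands, row, col, size=5):
    """Mean and standard deviation of each band in a size x size window.

    bands: list of 2-D grids (lists of rows), one grid per spectral band.
    row, col: index of the centre pixel (the fire or non-fire spot).
    Returns a list of (mean, std) tuples, one per band.
    """
    half = size // 2
    stats = []
    for band in bands:
        # Assumption: spots whose window would leave the image are rejected.
        if (row - half < 0 or col - half < 0
                or row + half >= len(band) or col + half >= len(band[0])):
            raise ValueError("window extends beyond the image")
        values = [band[r][c]
                  for r in range(row - half, row + half + 1)
                  for c in range(col - half, col + half + 1)]
        stats.append((fmean(values), pstdev(values)))
    return stats


# Two synthetic 5 x 5 bands: one constant, one holding the values 0..24.
flat = [[1.0] * 5 for _ in range(5)]
ramp = [[float(r * 5 + c) for c in range(5)] for r in range(5)]
print(window_stats([flat, ramp], 2, 2))
```

For the constant band the result is (1.0, 0.0); for the ramp band the mean is 12.0 and the standard deviation is √52 ≈ 7.21.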

Fig. (3) shows the proposed Wildfiredetect CNN model. The model consists of 5 convolutional layers, 5 max-pooling layers, and 2 fully connected layers. The first convolutional layer has 96 kernels of size 11 × 11 × 3 with a stride of 4 pixels and is followed by a max-pooling layer that reduces the data's complexity. The second convolutional layer has 256 kernels of size 5 × 5 × 64 with a stride of 2 and is followed by the second max-pooling layer. The third convolutional layer has 384 kernels of size 3 × 3 × 256 and no pooling, and the remaining convolutional layers use 384 and 256 kernels, respectively, with a stride of 1. The pooling layers use 3 × 3 filters. Finally, the 2 fully connected layers feed an output layer with a Softmax function that distinguishes fire from non-fire. To introduce non-linearity, the network uses the leaky ReLU activation function, a variant of ReLU that applies a small positive slope to negative inputs and thereby addresses the "dead neuron" problem of the standard ReLU.
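How the described convolution and pooling layers shrink a 256 × 256 input (the sample size given in Section 5) can be checked with the standard output-size formula ⌊(n + 2p − k)/s⌋ + 1. The sketch below assumes a 3-channel input, a pooling stride of 2, padding that preserves size for the 5 × 5 and 3 × 3 convolutions, and 3 × 3 kernels for the fourth and fifth convolutions; none of these are stated in the text, and only the two pooling layers whose positions the text gives are included. The names `out_size`, `LAYERS`, and `trace` are hypothetical.

```python
def out_size(n, kernel, stride, padding=0):
    """Spatial output size of a convolution or pooling layer (floor convention)."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding, output channels); None means channels are unchanged (pooling).
# Padding values, pooling strides and the conv4/conv5 kernel sizes are assumptions.
LAYERS = [
    ("conv1", 11, 4, 0, 96),
    ("pool1", 3, 2, 0, None),
    ("conv2", 5, 2, 2, 256),
    ("pool2", 3, 2, 0, None),
    ("conv3", 3, 1, 1, 384),
    ("conv4", 3, 1, 1, 384),
    ("conv5", 3, 1, 1, 256),
]


def trace(input_size=256, in_channels=3):
    """Return (layer name, channels, spatial size) after each layer."""
    size, channels = input_size, in_channels
    shapes = []
    for name, kernel, stride, padding, out_ch in LAYERS:
        size = out_size(size, kernel, stride, padding)
        channels = channels if out_ch is None else out_ch
        shapes.append((name, channels, size))
    return shapes


for name, channels, size in trace():
    print(f"{name}: {channels} x {size} x {size}")
```

Under these assumptions the last convolution produces a 256 × 7 × 7 feature map, that is, 12,544 values to flatten before the fully connected layers; other padding or pooling choices change this number.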

4. STUDY AREA

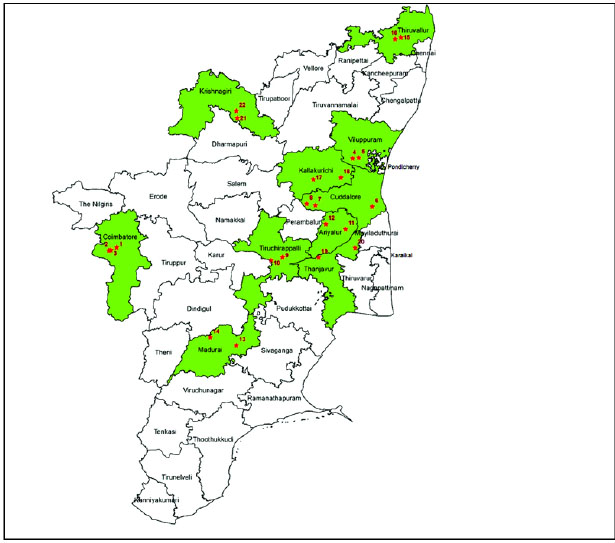

Tamil Nadu is the southernmost state of India. It has a geographical area of 1,30,060 sq km and extends from 8°05'N to 13°35'N latitude and from 76°15'E to 80°20'E longitude.

The state forest area (22,877 sq km) is classified into protected forest (1,782 sq km), reserved forest (20,293 sq km) and unclassed forests (802 sq km). According to forest canopy density classes, 3,605.49 sq km has very dense forest, 11,029.55 sq km has moderately dense forest and 11,728.98 sq km has open forest. The topographic map of Tamil Nadu is shown in Fig. (4).

Topographic map of tamil nadu.

The State Forest Department receives Near Real-Time (NRT) VIIRS active fire data from the Fire Information for Resource Management System (FIRMS) within 3 hours of satellite overpass. For each wildfire hotspot, the data include the geographic coordinates of the pixel centre, the date, the satellite overpass time, and the satellite name. Each active fire detection gives the centre of a pixel flagged as containing one or more fires; for VIIRS, this is a 375 m pixel. The State Forest Department then identifies the forest division concerned and notifies the District Forest Officer for necessary action.
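As a sketch of how downloaded hotspots might be restricted to the study area, the snippet below filters FIRMS-style CSV records with a latitude/longitude box built from the Tamil Nadu extent given at the start of this section. The column names (`latitude`, `longitude`, `acq_date`, `acq_time`) are assumed to follow the FIRMS CSV export and should be checked against the actual file; the function name `hotspots_in_extent` and the sample rows are hypothetical. A rectangle also admits points in neighbouring states and offshore, which a proper state-boundary polygon would exclude.

```python
import csv
import io

# Tamil Nadu extent from Section 4, converted from degrees and minutes to decimal degrees.
LAT_MIN, LAT_MAX = 8 + 5 / 60, 13 + 35 / 60
LON_MIN, LON_MAX = 76 + 15 / 60, 80 + 20 / 60


def hotspots_in_extent(csv_text):
    """Return (latitude, longitude, acq_date, acq_time) for rows inside the box."""
    rows = []
    for rec in csv.DictReader(io.StringIO(csv_text)):
        lat, lon = float(rec["latitude"]), float(rec["longitude"])
        if LAT_MIN <= lat <= LAT_MAX and LON_MIN <= lon <= LON_MAX:
            rows.append((lat, lon, rec["acq_date"], rec["acq_time"]))
    return rows


# Hypothetical sample: two hotspots inside the box and one far outside it.
SAMPLE = """latitude,longitude,acq_date,acq_time,satellite
11.41,76.70,2020-03-01,0812,N
10.05,77.49,2020-03-01,0812,N
28.61,77.21,2020-03-01,0812,N
"""
print(hotspots_in_extent(SAMPLE))
```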

In 2020–2021, MODIS detected 52,785 active fires in India and VIIRS detected 3,45,989; in Tamil Nadu, MODIS detected 202 and VIIRS 1,220 active fires in the same period. Daily VIIRS 375 m fire data for 2012 to 2020, collected from NASA's FIRMS website (https://earthdata.nasa.gov) [29], were used to characterize the study area. To fill this research gap, this study develops a wildfire detection system that applies the proposed Wildfiredetect CNN model to VIIRS and Landsat 8 satellite imagery.

5. RESULT AND DISCUSSION

This study used the PyTorch 1.3 deep learning (DL) framework. Active fire locations in India were obtained from Landsat-8 datasets. A total of 1,000 samples of 256 × 256 pixels were created: 600 training samples (60%), 300 testing samples (30%), and 100 validation samples (10%). The experiments were run on a machine with 8 GB of RAM, an Intel Core i5-8300H CPU, and an NVIDIA GeForce GTX 1060 GPU. The Wildfiredetect CNN model was trained for 100 epochs with a batch size of 100 and a learning rate of 10⁻³; its parameter settings for the study area are listed in Table 3. The model was trained by backpropagation using the Adaptive Moment Estimation (Adam) optimizer [30], and the weights were initialized with the Glorot initializer [31].
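A seeded shuffle-and-split reproduces the 60/30/10 partition of the 1,000 samples listed in Table 3. The function name `split_samples` and the fixed seed are illustrative choices, and the sketch does not preserve the 1:2 fire to non-fire ratio within each subset; a stratified split would be needed for that.

```python
import random


def split_samples(samples, train=0.6, test=0.3, seed=0):
    """Shuffle samples and split them into training, testing and validation lists.

    Whatever remains after the training and testing fractions goes to validation.
    """
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_train = round(len(shuffled) * train)
    n_test = round(len(shuffled) * test)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_test],
            shuffled[n_train + n_test:])


train_set, test_set, val_set = split_samples(range(1000))
print(len(train_set), len(test_set), len(val_set))  # -> 600 300 100
```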

| Parameter | Value |
|---|---|
| Training | Backpropagation |
| Initial weights | Glorot initializer |
| Batch size | 100 |
| Number of epochs | 50 |
| Learning rate | 10⁻³ |
| Activation function of hidden layers | Leaky ReLU |
| Training samples | 600 (60%) |
| Testing samples | 300 (30%) |
| Validation samples | 100 (10%) |

5.1. Evaluation Metrics

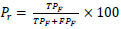

The performance of the proposed Wildfiredetect CNN model was evaluated using Recall Rate (Rr), Precision Rate (Pr), Commission Error (Ce), F-measure (Fm), Accuracy (AC), and Omission Error (Oe). Precision Rate (Pr) is the proportion of predicted fire spots that are true fire spots and is calculated using Eq. (2).

$$\mathrm{Pr} = \frac{TPF}{TPF + FPF} \times 100\% \tag{2}$$

Here, TPF denotes correctly classified fire spots, TNF denotes correctly classified non-fire spots, FPF denotes pixels misclassified as fire spots, and FNF denotes fire spots misclassified as non-fire spots. The more reliable the model's fire predictions, the higher its Pr value.

Commission Error (Ce) is the proportion of predicted fire spots that are wrong; a higher Ce means that the model flags more false fire spots. It is calculated using Eq. (3).

$$\mathrm{Ce} = \frac{FPF}{TPF + FPF} \times 100\% \tag{3}$$

Recall Rate (Rr) is the proportion of actual fire spots that the model identifies correctly; the higher the Rr, the fewer fire spots are missed. It is calculated using Eq. (4).

$$\mathrm{Rr} = \frac{TPF}{TPF + FNF} \times 100\% \tag{4}$$

Omission Error (Oe) is the proportion of actual fire spots in the original data that the model misses; the higher the Oe, the less complete the detection. It is calculated using Eq. (5).

$$\mathrm{Oe} = \frac{FNF}{TPF + FNF} \times 100\% \tag{5}$$

Accuracy (AC) is the ratio of correct predictions to all predictions and is calculated using Eq. (6).

$$\mathrm{AC} = \frac{TPF + TNF}{TPF + TNF + FPF + FNF} \times 100\% \tag{6}$$

The F-measure (Fm) combines Pr and Rr into a single measure of model performance and is calculated using Eq. (7).

$$\mathrm{Fm} = \frac{2 \times \mathrm{Pr} \times \mathrm{Rr}}{\mathrm{Pr} + \mathrm{Rr}} \tag{7}$$
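All six metrics follow from the four confusion-matrix counts. The sketch below assumes the standard definitions of these metrics and reports each value as a percentage; the function name `detection_metrics` is illustrative.

```python
def detection_metrics(tpf, tnf, fpf, fnf):
    """Pr, Ce, Rr, Oe, AC and Fm as percentages, from confusion-matrix counts."""
    pr = 100 * tpf / (tpf + fpf)                      # precision rate, Eq. (2)
    ce = 100 * fpf / (tpf + fpf)                      # commission error, Eq. (3)
    rr = 100 * tpf / (tpf + fnf)                      # recall rate, Eq. (4)
    oe = 100 * fnf / (tpf + fnf)                      # omission error, Eq. (5)
    ac = 100 * (tpf + tnf) / (tpf + tnf + fpf + fnf)  # accuracy, Eq. (6)
    fm = 2 * pr * rr / (pr + rr)                      # F-measure, Eq. (7)
    return {"Pr": pr, "Ce": ce, "Rr": rr, "Oe": oe, "AC": ac, "Fm": fm}


# Testing-data counts from Table 6: TPF, TNF, FPF, FNF.
for name, value in detection_metrics(277, 18, 4, 1).items():
    print(f"{name}: {value:.2f}%")
```

For the testing counts in Table 6, this gives Rr ≈ 99.64%, Ce ≈ 1.42%, AC ≈ 98.33%, and Fm ≈ 99.11%. Note that Pr + Ce and Rr + Oe each sum to 100% by construction.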

5.2. Experiment Result and Analysis

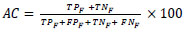

Fig. (5) shows the results of the proposed Wildfiredetect CNN model for the Tamil Nadu region. The model is compared with two types of existing algorithms, contextual and ML-based, which serve as baselines for assessing its efficiency. Table 4 compares the accuracy of these existing methods with that of the proposed Wildfiredetect CNN model.

Table 4 shows that the contextual algorithms, including the improved contextual algorithm, achieve lower accuracies than the ML-based algorithms. Because they rely on thresholds to find active fires in the dataset, these methods detect active fires less effectively than the other methods. The proposed Wildfiredetect CNN model's accuracy is 26.17 percentage points higher than that of the improved contextual algorithm (99.7% versus 73.53%). It also achieves higher accuracy than the other ML algorithms, as shown in Fig. (6).
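The 26.17 figure is an absolute difference in accuracy; relative to the improved contextual algorithm's accuracy, the gain is roughly 35.6%:

$$99.7\% - 73.53\% = 26.17 \text{ percentage points}, \qquad \frac{26.17}{73.53} \approx 0.356$$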

Result of proposed model for sample images.

Accuracy comparison of wildfire detection algorithms.

| Method | Accuracy (%) |
|---|---|
| Contextual algorithms | |
| VIFDAHL [6] | 95 |
| Optimised I-M Band algorithm [7] | 96 |
| Contextual algorithm [9] | 91 |
| Improved Contextual algorithm [10] | 73.53 |
| Contextual algorithm [12] | 92.3 |
| ML algorithms | |
| CNN + LSTM + U-Net [18] | 99 |
| CNN + Super resolution [17] | 97.4 |
| Proposed Wildfiredetect CNN model | 99.7 |

| Method | TPF | TNF | FPF | FNF | Pr (%) | Rr (%) | Ce (%) | Oe (%) | AC (%) | Fm (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Proposed Wildfire CNN Model | 977 | 18 | 2 | 3 | 99.79 | 99.69 | 0.2 | 0.3 | 99.7 | 99.73 |

Pr, Rr, Ce, Oe, AC, and Fm were used as indicators of the proposed Wildfiredetect CNN model's performance, and the results for the training data are shown in Table 5. The proposed model achieves the highest accuracy, 99.7%, with an omission error of only 0.3%.

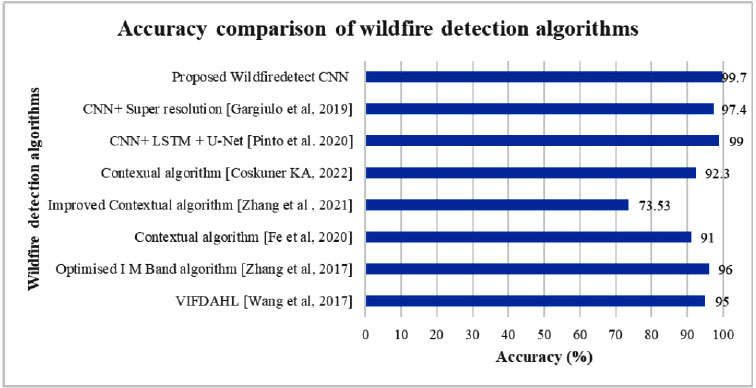

Confusion matrix of the proposed model for the testing data.

| Method | TPF | TNF | FPF | FNF | Pr (%) | Rr (%) | Ce (%) | Oe (%) | AC (%) | Fm (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Proposed Wildfire CNN Model | 277 | 18 | 4 | 1 | 98.5 | 99.64 | 1.42 | 0.35 | 98.3 | 99.1 |

The confusion matrix summarises how the proposed model classifies fire and non-fire spots in the study-area dataset, and it shows good results for this wildfire classification problem. On the training data, the model classifies fire spots with 99.79% Pr, 99.69% Rr, 0.2% Ce, 0.3% Oe, 99.7% AC and 99.73% Fm. Fig. (7) shows the confusion matrix of the proposed model for the testing data: the model yields 277 TPF and 18 TNF, while 4 FPF and 1 FNF are misclassified, as listed in Table 6.
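The step from confusion-matrix counts to the six quality indices can be sketched in Python as follows. This is a minimal sketch assuming the usual definitions: Pr = TPF/(TPF+FPF), Rr = TPF/(TPF+FNF), Ce = FPF/(TPF+FPF) = 1 − Pr, Oe = FNF/(TPF+FNF) = 1 − Rr, AC as the fraction of all samples classified correctly, and Fm as the harmonic mean of Pr and Rr. The names `ConfusionCounts`, `tally` and `metrics` are hypothetical helpers, not the authors' code.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tpf: int  # fire spots correctly detected as fire
    tnf: int  # non-fire spots correctly rejected
    fpf: int  # non-fire spots wrongly flagged as fire (commission)
    fnf: int  # fire spots missed by the model (omission)


def tally(y_true: list[int], y_pred: list[int]) -> ConfusionCounts:
    """Count outcomes for binary labels, with 1 = fire and 0 = no fire."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    return ConfusionCounts(
        tpf=pairs.count((1, 1)),
        tnf=pairs.count((0, 0)),
        fpf=pairs.count((0, 1)),
        fnf=pairs.count((1, 0)),
    )


def metrics(c: ConfusionCounts) -> dict[str, float]:
    """Pr, Rr, Ce, Oe, AC and Fm in percent (assumes Ce = 1 - Pr and Oe = 1 - Rr)."""
    pr = c.tpf / (c.tpf + c.fpf)
    rr = c.tpf / (c.tpf + c.fnf)
    total = c.tpf + c.tnf + c.fpf + c.fnf
    return {
        "Pr": 100 * pr,
        "Rr": 100 * rr,
        "Ce": 100 * c.fpf / (c.tpf + c.fpf),
        "Oe": 100 * c.fnf / (c.tpf + c.fnf),
        "AC": 100 * (c.tpf + c.tnf) / total,
        "Fm": 100 * 2 * pr * rr / (pr + rr),
    }


if __name__ == "__main__":
    # Testing-data counts from Table 6.
    for name, value in metrics(ConfusionCounts(tpf=277, tnf=18, fpf=4, fnf=1)).items():
        print(f"{name}: {value:.2f}%")
```

With the Table 6 counts, this reproduces the tabulated testing metrics to within rounding (for example, Pr ≈ 98.58% and AC ≈ 98.33%).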

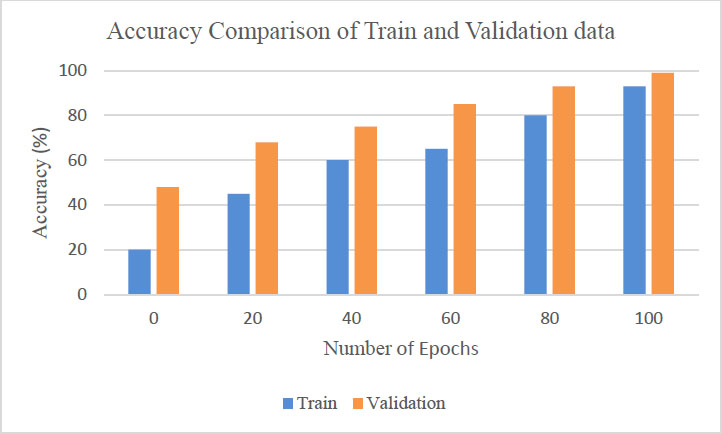

Fig. (8) depicts the training and validation accuracy of the proposed model. The model is trained for 100 epochs, and the accuracy at each epoch is recorded for both the training and validation data. The highest accuracy, 98.3%, is reached at epoch 100.
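Reading a per-epoch accuracy curve such as the one in Fig. (8) amounts to locating the epoch with the peak validation accuracy. The Python sketch below assumes the history is a plain list of per-epoch accuracies, for instance the `val_accuracy` list in a Keras `History.history` dict when accuracy is tracked. The function name and the example values are hypothetical, not the paper's logged results.

```python
def best_epoch(val_accuracy: list[float]) -> tuple[int, float]:
    """Return the 1-based epoch with the highest validation accuracy, and that accuracy."""
    if not val_accuracy:
        raise ValueError("val_accuracy must not be empty")
    # max() over indices returns the earliest epoch when several epochs tie.
    idx = max(range(len(val_accuracy)), key=val_accuracy.__getitem__)
    return idx + 1, val_accuracy[idx]


if __name__ == "__main__":
    # Illustrative curve only.
    print(best_epoch([0.81, 0.92, 0.95, 0.95]))
```

Reporting the earliest epoch on ties is a design choice that favours the shortest training run reaching the peak.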

5.3. Experimental Result Analysis on the Alaska Forests Dataset

In this section, the proposed model is tested on the Alaska forests dataset to demonstrate its effectiveness in detecting small fires.

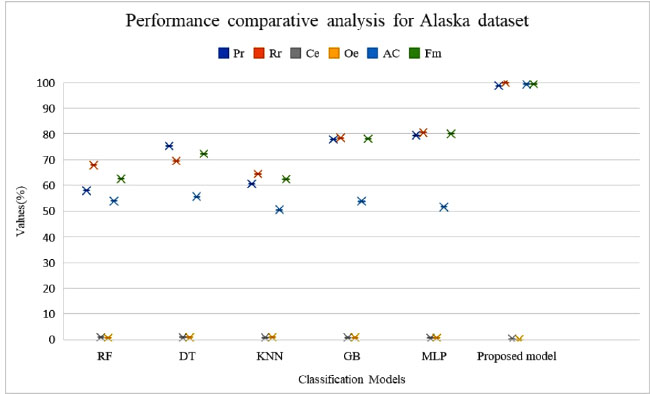

Coffield et al. [32] used machine learning models to predict small fires amid the frequent occurrence of wildfires in Alaska's forests. Decision Trees (DT) achieve higher accuracy in detecting small fires in this study area than other machine learning models such as Random Forests (RF), K-Nearest Neighbours (KNN), Gradient Boosting (GB) and Multi-Layer Perceptrons (MLP). The active fire data were collected from the MODIS sensor over the years 2001–2017 and contain up to 1,168 fire spots. This Alaska dataset was used to test the performance of the Wildfiredetect CNN model. The proposed model achieves 99.4% accuracy, which is higher than that of the RF, DT, KNN, GB and MLP models. Its accuracy for small fires in the Alaska forests is 45.3, 43.6, 48.7, 45.4 and 47.6 percentage points higher than that of the RF, DT, KNN, GB and MLP models, respectively. Fig. (9) compares the overall performance evaluation metrics of various classification models with those of the proposed model on the Alaska dataset [33-40].

Analysis of training and validation accuracy of the proposed model.

Performance comparison of various models for the Alaska dataset.

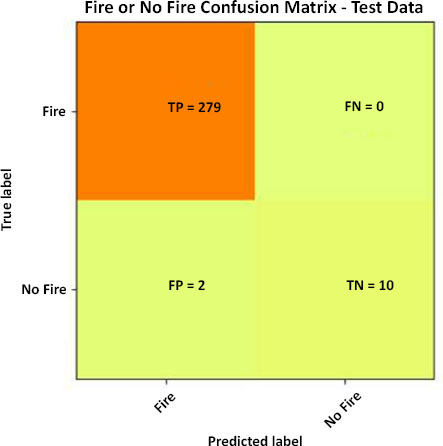

In this research, 582 training samples (50%), 291 testing samples (25%) and 291 validation samples (25%) were used to evaluate the proposed Wildfire CNN model on the Alaska dataset with six quality indices: Pr, Rr, Ce, Oe, AC and Fm. Table 7 compares the performance of the proposed model with that of various existing ML algorithms. The proposed model performs better at detecting active fire spots and achieves lower error rates. Table 8 shows the performance metrics of the proposed model for the testing data in the Alaska dataset: 99.2% Pr, 100% Rr, 0.7% Ce, 0% Oe, 99.3% AC and 99.69% Fm.
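A 582/291/291 partition (50%/25%/25% of 1,164 samples) can be produced with a seeded shuffle, as in the Python sketch below. The sketch assumes a plain random split; the text does not say whether the split was stratified by class, and `split_indices` and its `seed` parameter are hypothetical.

```python
import random


def split_indices(n: int, fractions=(0.5, 0.25, 0.25), seed: int = 0):
    """Shuffle range(n) and cut it into training, testing and validation index lists."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # seeded so the split is reproducible
    n_train = round(n * fractions[0])
    n_test = round(n * fractions[1])
    train = idx[:n_train]
    test = idx[n_train:n_train + n_test]
    val = idx[n_train + n_test:]  # the remainder, so no sample is dropped
    return train, test, val


if __name__ == "__main__":
    train, test, val = split_indices(1164)
    print(len(train), len(test), len(val))
```

Taking the validation set as the remainder ensures that the three subsets always cover every sample, even when the fractions do not divide `n` evenly.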

Fig. (10) depicts the confusion matrix of the proposed model for the testing data in the Alaska dataset. The model yields 279 TPF and 10 TNF, while 2 FPF and 0 FNF are misclassified.

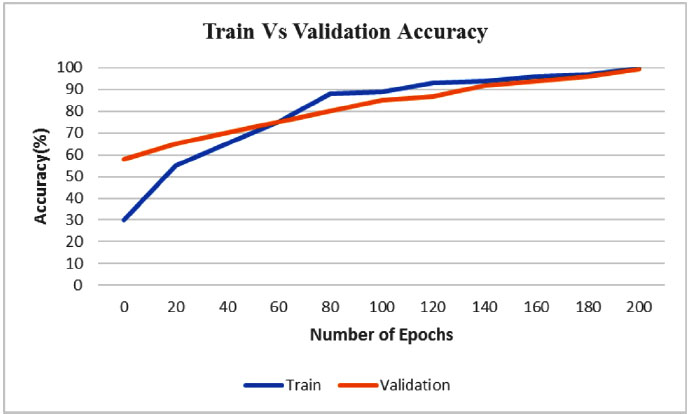

The experimental analysis demonstrates the effectiveness of the proposed model for detecting wildfires in the dataset. It also shows that the proposed model detects small fires in the Alaska forests dataset more accurately than the other ML algorithms. Fig. (11) depicts the training and validation accuracy of the proposed model. The model is trained for 200 epochs, and the accuracy at each epoch is recorded for both the training and validation data on the Alaska dataset. The highest accuracy, 99.3%, is reached at epoch 200.

| Method | Pr (%) | Rr (%) | Ce (%) | Oe (%) | AC (%) | Fm (%) |
|---|---|---|---|---|---|---|
| RF | 57.95 | 67.87 | 0.89 | 0.72 | 53.9 | 62.51 |
| DT | 75.34 | 69.45 | 0.86 | 0.82 | 55.6 | 72.28 |
| KNN | 60.61 | 64.34 | 0.81 | 0.88 | 50.5 | 62.41 |
| GB | 77.83 | 78.45 | 0.78 | 0.78 | 53.8 | 78.13 |
| MLP | 79.45 | 80.56 | 0.56 | 0.64 | 51.6 | 80 |
| Proposed Wildfiredetect CNN Model | 98.74 | 100 | 0.25 | 0 | 99.3 | 99.36 |

| Method | TPF | TNF | FPF | FNF | Pr (%) | Rr (%) | Ce (%) | Oe (%) | AC (%) | Fm (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Proposed Wildfire CNN Model | 279 | 10 | 2 | 0 | 99.2 | 100 | 0.7 | 0 | 99.3 | 99.69 |

Confusion matrix of the proposed model for the testing data in Alaska dataset.

Training and validation accuracy analysis of the proposed Wildfire CNN model on the Alaska dataset.

CONCLUSION

Determining the occurrence of wildfires accurately is difficult yet essential. Because the threshold fluctuates with the research location and climate change, the widely used contextual technique is vulnerable to large commission and omission errors. Very few studies apply ML techniques to VIIRS data for wildfire detection. This paper proposes a Wildfiredetect CNN that uses VIIRS data to detect wildfires effectively, taking the Tamil Nadu region as the study area. To estimate the effectiveness of the proposed model, the performance metrics Pr, Rr, Ce, Oe, AC and Fm were considered. Compared with the contextual methods, the proposed model achieves the highest performance metric values on the training data: 99.69% recall rate, 99.79% precision rate, 0.3% omission error, 0.2% commission error, 99.73% F-measure and 99.7% accuracy. The proposed Wildfiredetect CNN model also achieves an accuracy 26.17 percentage points higher than the improved contextual algorithm. It outperforms the existing algorithms in wildfire detection and significantly reduces the false-detection rate. The experimental analysis on the Alaska forests dataset also shows that the proposed model detects small fires more accurately than existing ML algorithms such as RF, DT, KNN, GB and MLP, with a 100% recall rate, 99.2% precision rate, 0% omission error, 0.7% commission error, 99.69% F-measure and 99.3% accuracy on the testing data. Further research is needed on wildfire detection and monitoring systems that combine ML algorithms with satellite remote sensing technology.

AUTHORS' CONTRIBUTION

It is hereby acknowledged that all authors have accepted responsibility for the manuscript's content and consented to its submission. They have meticulously reviewed all results and unanimously approved the final version of the manuscript.

LIST OF ABBREVIATIONS

| Abbreviation | Meaning |
|---|---|
| MODIS | Moderate Resolution Imaging Spectroradiometer |
| VIIRS | Visible Infrared Imaging Radiometer Suite |
| SNPP | Suomi National Polar-orbiting Partnership |

AVAILABILITY OF DATA AND MATERIALS

The datasets used in this research work (the NRT VIIRS 375 m Active Fire product VNP14IMGT and the Alaska dataset) are available at https://earthdata.nasa.gov/firms.